Airflow in Production: A Fictional Example

This is the first article of the series “X in Production: A Fictional Example,” which aims to provide simplified examples of how a technology would be used in a real production environment. We hope this will help the reader understand what the technology is and how it’s used in a short amount of time.

What is Airflow? Airflow is a workflow scheduler created by Airbnb and currently maintained by the Apache Software foundation.

Why use a workflow scheduler? Organizations usually need to perform batch processes at certain times. In Linux they can be triggered by adding an entry to the crontab file, but once your processes get more complex and there are dependencies between tasks, a workflow scheduler can coordinate these in a simpler manner.

Competitors: Luigi, Azkaban, Pinball, Oozie.

Advantages: Maintained by Apache Software Foundation, workflows are defined programmatically in Python, simple and useful web UI.

Fictional Example

Optional: Watch the following video to see the example before reading:

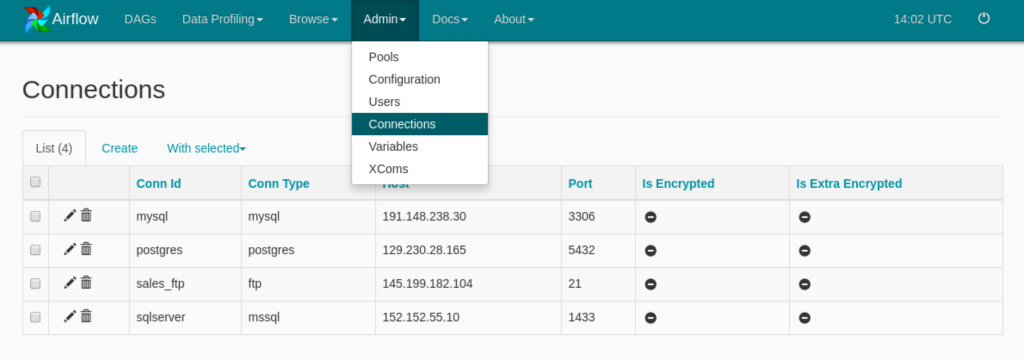

I’m Ryan Bark, lead Data Engineer at Fictional Hotels inc.. We have separate software programs for different purposes, each with their own database.

- Manage client bookings: MySQL.

- Manage contracts with agencies: PostgreSQL.

- Control client checkin and checkout times: Microsoft SQL Server.

- We also have an FTP server where our accountants frequently leave financial reports in CSV format.

In order to unify this data we have several custom batch processes that run every night, extract the data from each source and store it in our data lake where it can later be accessed or transformed and inserted into the data warehouse.

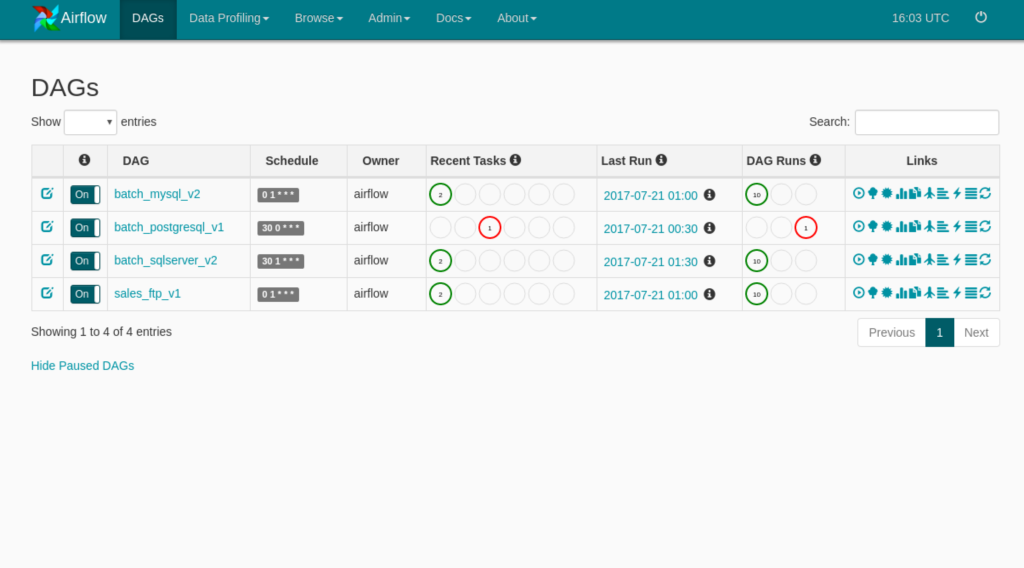

Upon getting to work in the morning, the first thing I do is check Airflow UI ‘s dashboard. Even before coffee.

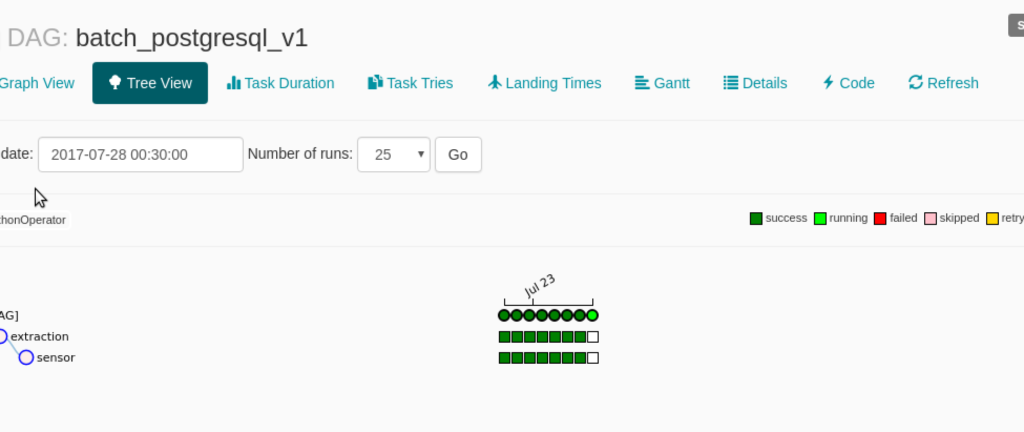

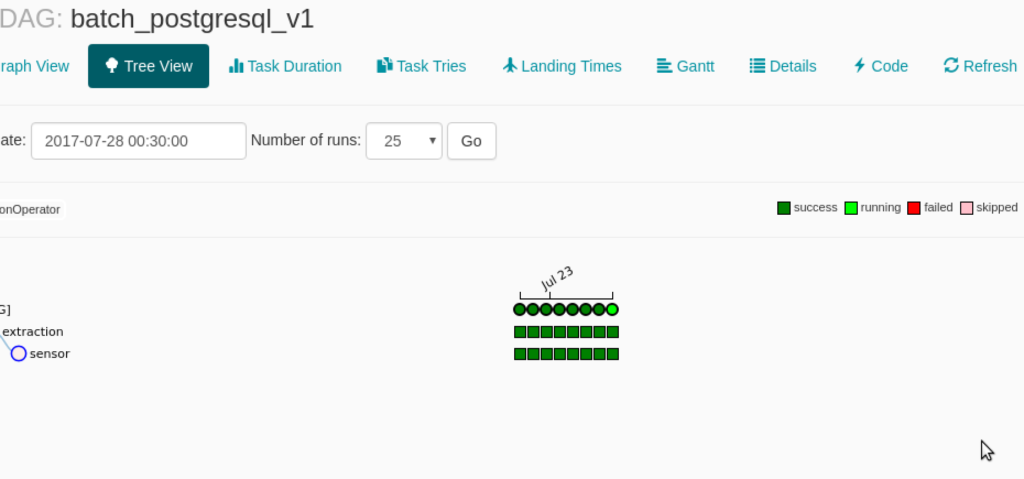

We can see that batch_postgresql_v1 has one failed task while the other DAGs have executed correctly.

Intervention

This has an error in our production environment and has high priority, so we will sort that out before we pay attention to any requests for new features.

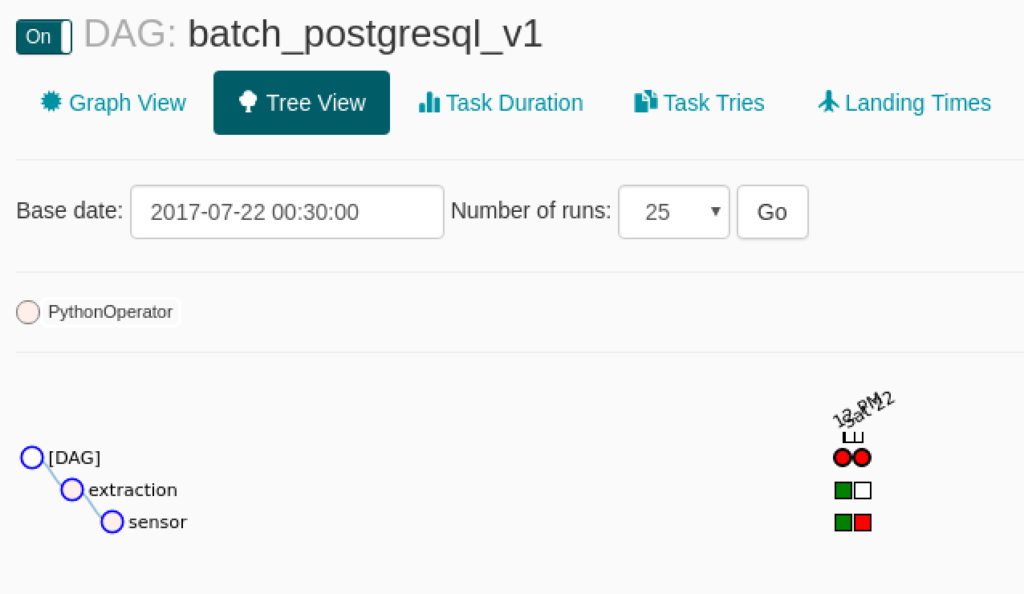

We can see that the previous execution had been successful, so let’s find out what happened.

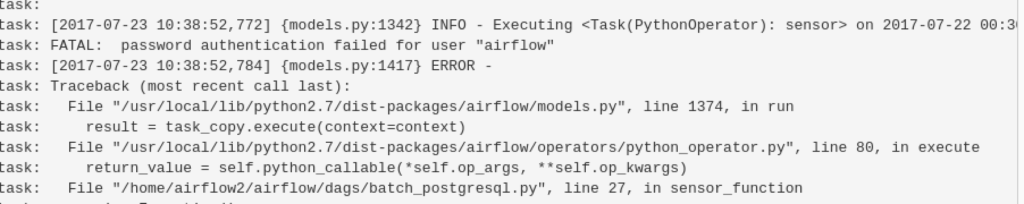

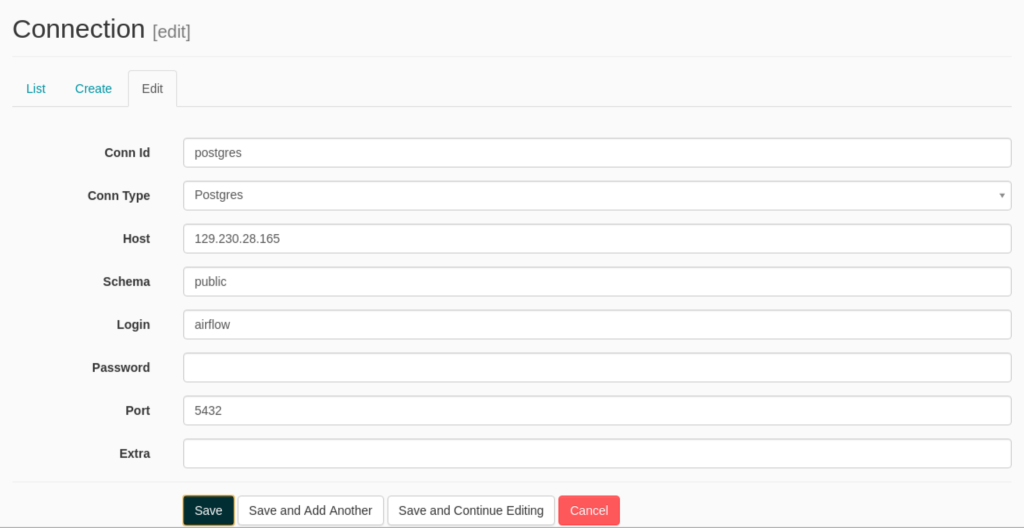

It looks like the Database Admin might have changed the password for security reasons without warning us. We will have to go talk to him to get the new password and make sure it doesn’t happen again.

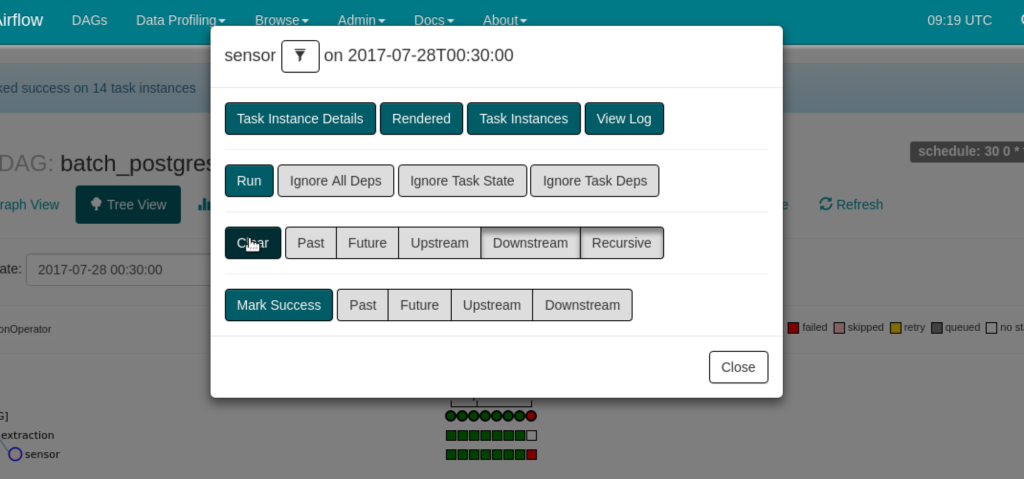

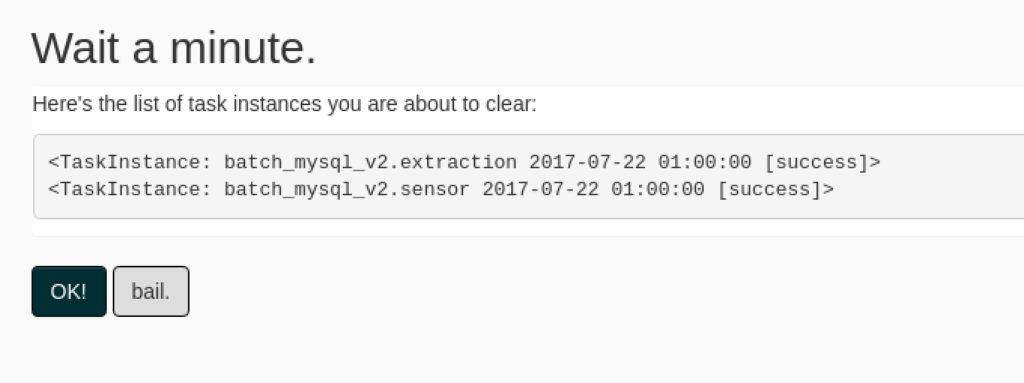

Now that we have the new password and it has been changed in the connections page, we will clear the failed execution. This will delete the execution record from Airflow’s database, so the next time the scheduler checks it will see that there is a pending execution and it will run the task.

This time the execution completes without errors, so it appears in green.

New Feature Request: Hotel Rooms information

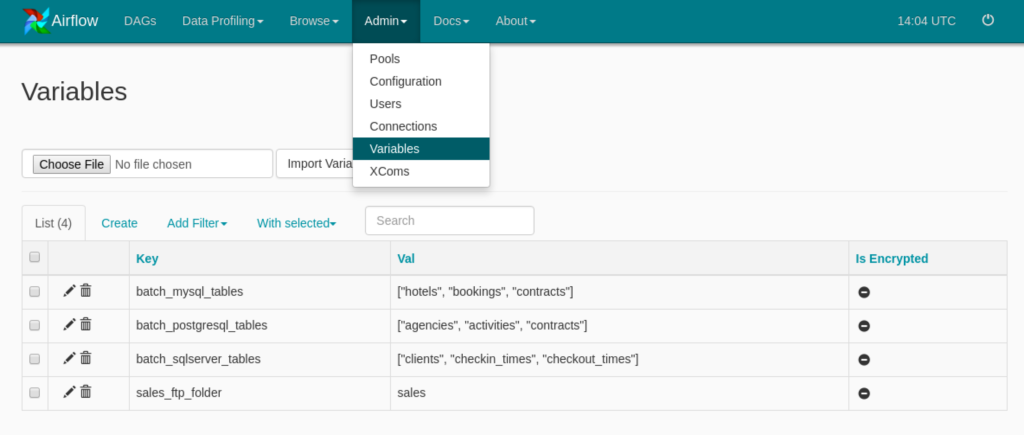

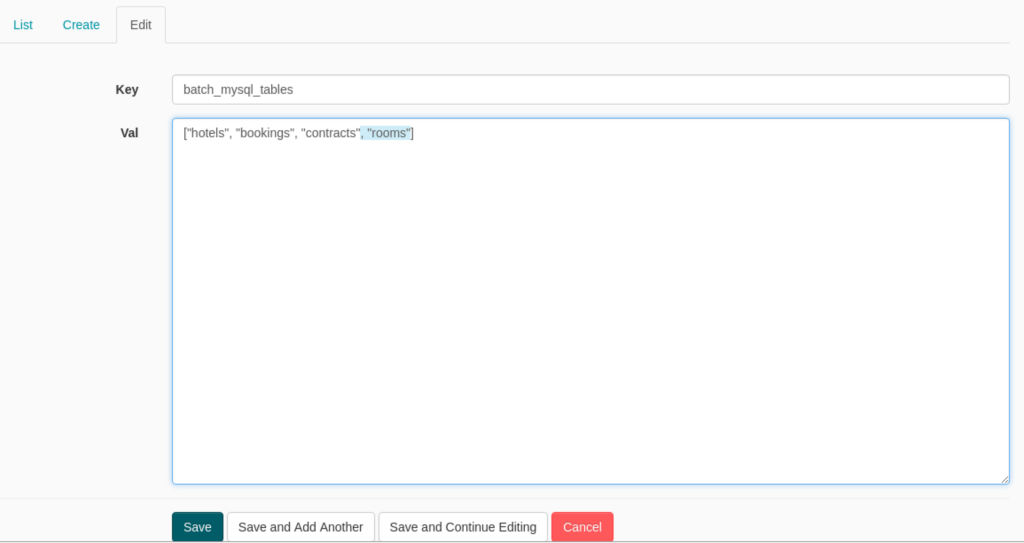

I also get an email that our accountants would like to also have information about hotel rooms (price etc.). I know that this information is located inside our MySQL database in a table called rooms. Luckily we had defined the tables to be extracted as an airflow variable, so we don’t need to modify and deploy new code, we just have to add the table to our variable.

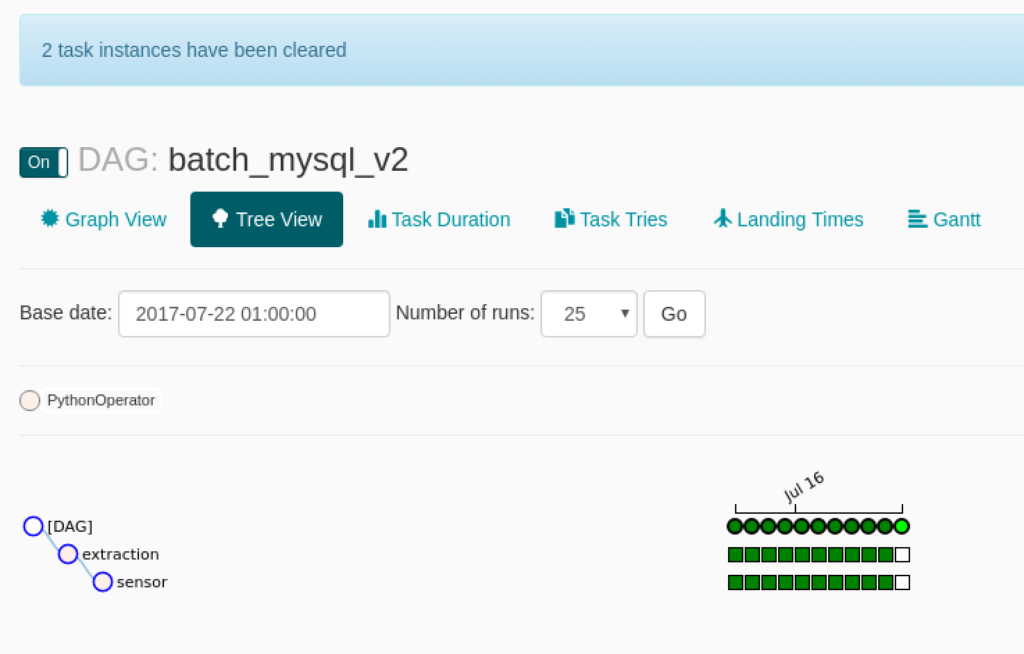

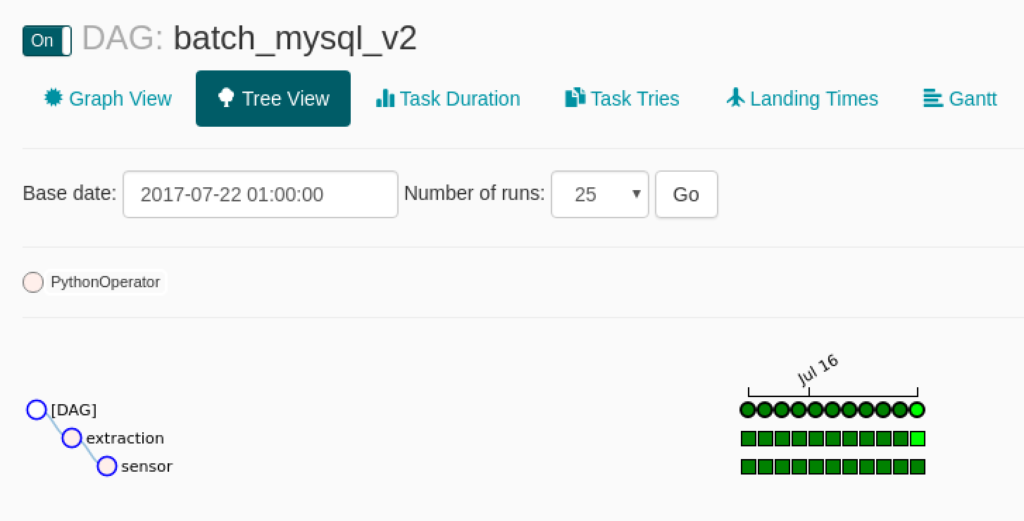

Once we have done that we will clear the tasks for the previous date so that the scheduler launches it again, like we did for Batch PostgreSQL.

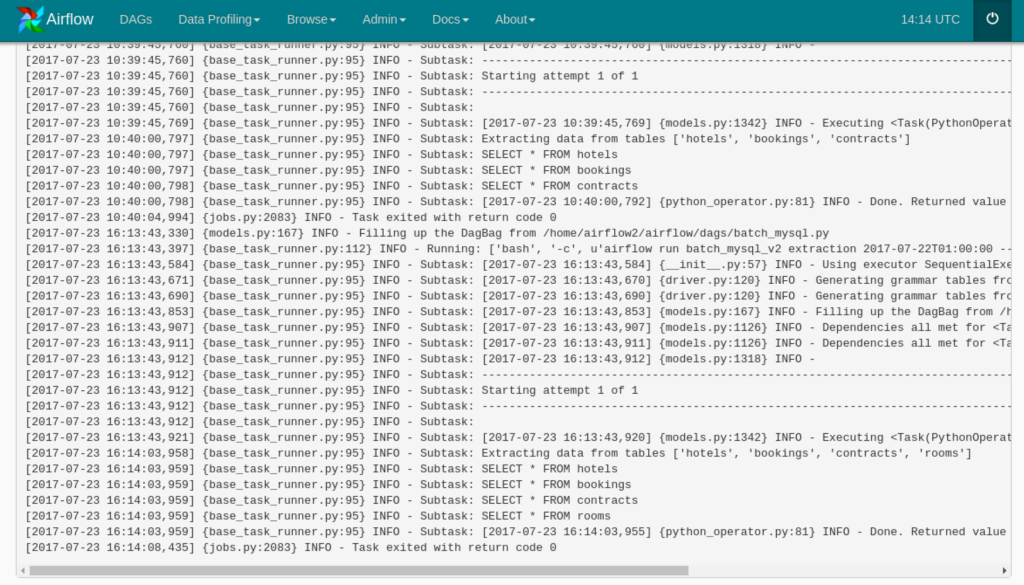

As we can see in the log, the task now also extracts data from the table “rooms”, whereas in the previous execution (which can also be seen further up in the log), it did not.

An thus our productive morning of Airflow duty comes to an end.

Parting Thoughts

We have seen that Airflow is commonly used to schedule batch processes that extract data from databases, but it can also work with FTP servers or any other data source that can be accessed with Python (so virtually any data source). It can also be used to schedule processes that transform and load data, but we have not seen that today.

We went over Airflow’s UI and how we can easily identify failed tasks by looking at its dashboard. We also saw how we can edit a connection and clear a task so that it executes them again.

Finally, we used Airflow variables to configure tasks and change their behavior without having to modify and deploy code.

This article is not meant as a tutorial on how to use Airflow, but rather give a high level overview of what it can do and what it looks like. If you are interested in learning more, subscribe to our newsletter for more Airflow articles.